Behind every swipe and second you spend on social media is a highly complex system trained to predict what will keep you watching, sharing and commenting just that little bit more.

These increasingly sophisticated algorithms dictate more than your battery life and attention span. They shape public discourse, politics and identity, and influence our relationships with the world and with one another.

Arguably, they are among the most powerful yet opaque forces in our lives, directing what appears in our social media feeds.

But this era of black-box systems shaping what we see is shifting. In recent months, social media platforms and search engines have signalled the start of a new age of information by giving users more control over their feeds. This trend goes beyond minor tweaks, toggles or mutes, and it is set to grow this year.

Leading the change are new features being tested or slowly rolled out across Meta apps and on Google Search and Discover – tools that put more power in your hands.

Your Algorithm – a new way to see and control Instagram

In December, Instagram chief executive Adam Mosseri asked his followers: “Ever wish you could see your algorithm? Make some changes? Well, as of this week, you can do so on Instagram.”

He unveiled the new Your Algorithm feature, which uses artificial intelligence to show users which topics their Reels feed is optimised around. Users can edit or add to this list and can choose to share it publicly via Stories.

Mosseri said Instagram was testing this functionality on the Search tab, and that the aim was to bring it to the Home feed.

The tool is currently available only in the United States, but he said wider availability, including support for other languages, is coming soon. “I’m particularly excited about a world where there’s transparency and control around your algorithm,” he said.

Dear algo on Threads

Instagram is not the only Meta platform to venture down this track. On its sister app, Threads, the shift appears to have been the direct result of a user-led movement. Users were sharing posts jokingly asking the algorithm to show them more or less of a particular topic, starting these posts with ‘Dear Threads algo’. On December 4, Meta chief executive Mark Zuckerberg responded to the growing trend by announcing a new feature.

“Inspired by all of you who started 'dear threads algo' requests, we're going to test a new feature where if you post 'dear algo' it will actually put more of that content in your feed!”

Head of Threads Connor Hayes explained how the AI feature works, noting that the requested changes are temporary and public: “If your profile is public, people can see your request, connect with you about it, or repost it,” Hayes wrote. “This is just a test, so not everyone will have access now, but we're working on rolling it out more broadly soon.”

From requesting no spoilers about the Stranger Things finale to asking for curated feeds of people with a certain expertise, users have taken to the feature – even where it is not yet available.

Just ask Grok

Threads appears to have been testing these features since at least September. That same month, Elon Musk announced that X would be powered by AI-curated algorithms and that “by November or certainly December, you will be able to adjust your feed dynamically just by asking Grok”. Essentially, it is the same concept, delivered through Grok, the large language model (LLM) chatbot built into X.

This AI-powered customisation trend is also happening on YouTube, which has revealed a beta version of a chatbot that lets viewers request the content they want using natural language.

“This AI-powered feature offers the ability to tell YouTube what you are interested in, allowing you to choose from pre-set options or ask for types of videos in your own words. It's a more efficient and personalised way to discover content and get recommendations based on your interests,” the platform said in its guidance on the feature, noting it is currently only available in English on desktop in the United States.

Google’s Preferred Sources and follow on Discover

Google has also been experimenting with measures that let users manually adjust the algorithms that determine their Search results or the stories that appear in the app’s Discover section.

In August, the tech giant began testing Preferred Sources in the US and India, before releasing it worldwide for English-language users in mid-December, with a roll-out to all supported languages expected in the coming months.

The feature lets users customise the Top Stories section by selecting which news outlets or digital platforms they prefer to read. Notably, Google says results are still ranked algorithmically and drawn from a wide variety of domains, but can be refined to show preferred sources more often.

Add The National to your preferred sources, here.

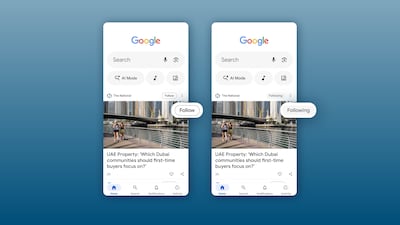

Discover, Google’s most algorithm-led surface, which suggests content based on behaviour, interests and location, has also been tweaked to allow more direct personalisation. Unlike Search results, which are guided by user queries, Discover is designed to be a more serendipitous experience: its recommendations come from predictive interest modelling rather than keywords. In September, the platform began rolling out a ‘Follow’ button that appears next to story previews.

As with its counterpart in Search, Preferred Sources, tapping this button means you will see more content from that website or creator. However, for most users the feature is available only on mobile, and access is patchy for non-Android users. Google has so far been tight-lipped about global access to Discover on desktop, but it could become more widely available in 2026.

Many of these features mirror or build on options already available elsewhere. Microsoft’s MSN lets users follow publishers in its own desktop Discover tab, and Instagram and Facebook have long allowed users to ‘favourite’ or mute accounts. This year, expect both new and long-standing content controls to become more prominent across popular apps and sites as consumer demand grows and legislation tightens.

Why this shift to allow more control?

Discovery algorithms have controlled social media feeds since the early 2010s. Facebook led the way, moving from its original EdgeRank system, which prioritised relationship strength above all else, to machine-learning models that used a complex set of signals to increase engagement and retention.

The advent of the smartphone, and later the success of TikTok’s powerful For You ranking system, cemented this black-box curation as the norm across platforms.

Chronological updates from followed accounts were long gone, until 2022, when legislation forced platforms to bring the option back. But these options were all but hidden and never the default.

As Instagram chief Mosseri told his followers on Threads: “You can switch to chronological feed, but when we make it the default people use the app a lot less, and in turn average reach goes down a lot as well.”

Bluesky thinking and AI slop

After years of testing and limited public access, Bluesky, which was founded in 2019 as a Twitter side project by Jack Dorsey, then Twitter’s chief executive, broke through with a hybrid of the old and the new. With its ethos of user control, Bluesky turned the default on its head.

It lets users choose from multiple feeds and decide which algorithm, chronological or discovery, powers each one. By November 2024, as it exploded in popularity, technology journalist Chris Stokel-Walker billed it as “the future of social media” and a “pick-your-own algorithm era of social media”.

We are also clearly in the era of generative AI content, which, for better or worse, is drastically changing what we see in our feeds. In October 2025, Pinterest announced it would give users more control over AI-generated content, then expanded those controls to more content categories in December.

In its statement about the release, it said: “Generative AI content is rapidly transforming the internet, now making up an incredible 57% of all online material. At Pinterest, we’ve heard from our users … That's why we’re leading the way in giving users more control over their experience”.

Transparency and control by law

Regulation is the biggest behind-the-scenes factor driving this collective effort to give back control. Under the EU’s Digital Services Act (DSA), platforms with 45 million or more monthly active users in the EU must offer a non-personalised feed option to users across the bloc’s 27 member states.

As a result, since 2022 the large platforms have given users the option to switch to a chronological feed, but it isn’t the default. This approach of meeting only the minimum requirement is under pressure for several reasons.

In October 2025, a Dutch court ruled that Meta had to give users more accessible options for non-algorithmic timelines, in line with the DSA. Meta said it would appeal against the decision, but the ruling spotlights the issue and signals the start of meaningful legal action under the DSA.

By August, most of the requirements of the EU’s AI Act, which has been phased in since 2024, will also be in force, including obligations for transparency.

Algorithmic accountability in the US

In the United States, two senators recently proposed the Algorithm Accountability Act. Announcing the legislation in late November 2025, Utah Representative Mike Kennedy said in a statement: “Social media companies have built powerful algorithms that prioritise engagement and profit, too often overlooking their role in amplifying dangerous content”, adding that the act would ensure “they are accountable for preventing foreseeable harm caused by their algorithmic feeds”.

If passed, the bipartisan bill would hold social media firms directly accountable for the effects of their algorithmic feeds on US users.

Liability, echo chambers and privacy – the risks of refinement

As this year unfolds and AI-powered algorithms become increasingly sophisticated, we will certainly see more of the “human in the loop”, with users gaining more levers to control what the algorithms serve up. But the shift is not without risks.

This shift will probably mean less clarity on legal accountability. Can social media companies be held liable for a feed that you have partly designed?

With publicly visible algorithm requests, there is little doubt that marketers and bad actors will seize on the shared data to target users for their own ends. These more detailed requests also mean stronger training data for AI systems, sharpening those time-sapping recommendations even further.

The risk of echo chambers that narrow content exposure and deepen social divides will remain, and may worsen if users can explicitly mute every shade of dissenting opinion.

Whether this era empowers users or amplifies the potential for harm, one thing is certain: 2026 is the year to start training your algorithms.